AI Changed How I Delete Code—And That Changes Everything🤖

Why artificial intelligence in software development might represent something fundamentally different from previous technological advances. ✨

The Paradox of Painless Deletion 🗑️

Last week I shipped an AI‑generated refactor of our document chunker. It looked pristine: clean structure, thoughtful comments. Then a test output felt… off. The model had quietly picked a different tokenizer from the one our sentence splitter uses.

I deleted the entire refactor without a second thought.

That ease of deletion felt new. When code isn’t “mine,” my ego isn’t tangled up in sunk costs. I don’t defend an approach; I sculpt toward the right one.

But the same episode revealed something unsettling: that subtle, crucial decision slipped past me. Was that a normal tooling hiccup—or a sign that AI is changing the relationship between developer, tool, and code?

What I mean by regime change 🧭

By “regime change,” I mean a shift that alters who holds intent, how errors teach us, and what skills transfer—a change in the cognitive relationship between developer, tool, and code, not just speed. This piece offers three quick tests to tell whether you’re getting harmless acceleration—or crossing into a new regime that can dull your craft if unmanaged.

Three Critical Tests 🔬

Use these as a pocket diagnostic: if two or more fail, you’re in “new regime” territory and should add guardrails.

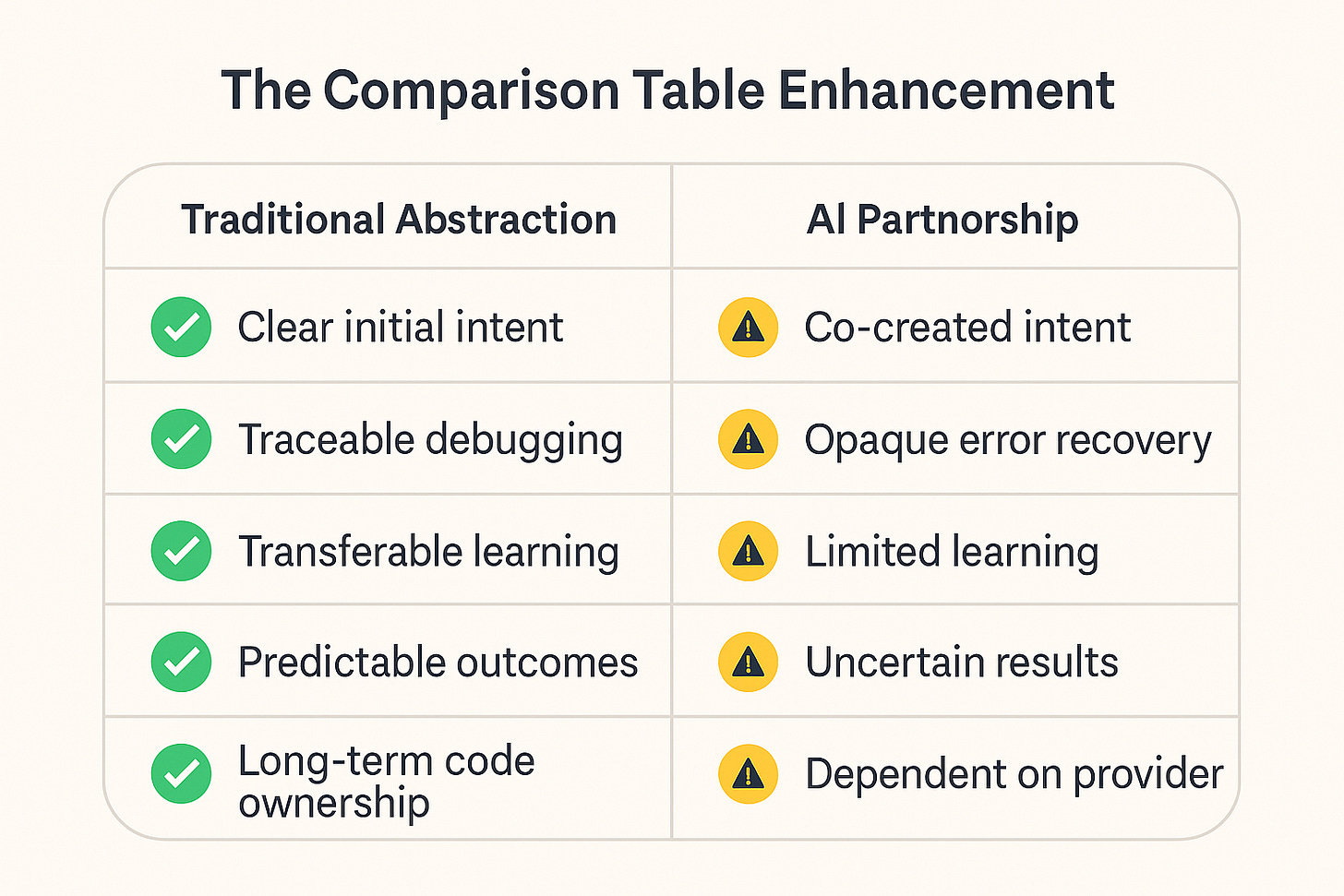

Test 1: Who Holds the Intent? 🎯

Traditional tools: Intent precedes and guides implementation. When you choose a framework or language, you already have at least a reasonably clear idea of how you want to build. The tool helps you express that vision more efficiently.

Generative AI: Intent emerges through iterative dialogue. You don't always know precisely how you want to build (and sometimes what) until you see what the AI produces. The process becomes co-creative: the AI doesn't simply execute your vision, it actively shapes it through interpretations and suggestions drawn from its training data. That training data lacks your context: your explicit knowledge and, even more, your implicit knowledge.

Implication: The shift from "implementation of pre-existing vision" to "co-evolution of intent and implementation" represents a qualitative change in the creative process.

Test 2: Error Traceability 🐛

Traditional tools: When code fails, you can follow a clear causal chain. Debugging is educational: each traced error improves your understanding of the system and its dynamics.

Generative AI: When AI produces problematic code, the underlying "reasoning" is largely opaque and, again, derived from code learned in entirely different contexts. You can't inspect the decision-making process behind specific implementation choices, which limits what you can learn from failure.

Implication: The opacity of AI's decision-making process breaks the traditional error-learning cycle that characterizes technical skill development.

Test 3: Knowledge Transferability 🎓

Traditional tools: Each abstraction layer teaches transferable principles. Learning assembly teaches you about memory management and registers. Mastering a framework teaches you architectural patterns applicable elsewhere.

Generative AI: What do you learn when AI handles algorithmic decisions, edge case considerations, and architectural choices? If learning is limited to "how to collaborate with AI," transferability to other contexts may be constrained.

Implication: The risk is developing AI-interaction-specific skills rather than fundamental understanding of programming problems.
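As a rough illustration, the pocket rule from the start of this section (two or more failed tests puts you in new-regime territory) fits in a few lines of Python. The class and field names are hypothetical, and the pass/fail answers are judgment calls you supply; the code only tallies them.

```python
from dataclasses import dataclass


@dataclass
class RegimeCheck:
    """Answers to the three tests for one task or workflow (True means the test passes)."""

    intent_held_by_human: bool  # Test 1: intent set by you, not emerging from the model
    errors_traceable: bool      # Test 2: you could trace why the code failed
    knowledge_transfers: bool   # Test 3: you learned something reusable elsewhere

    def failures(self) -> list[str]:
        """Names of the tests that failed, in test order."""
        results = {
            "intent": self.intent_held_by_human,
            "traceability": self.errors_traceable,
            "transferability": self.knowledge_transfers,
        }
        return [name for name, passed in results.items() if not passed]

    def new_regime(self) -> bool:
        """Pocket rule from the text: two or more failures means add guardrails."""
        return len(self.failures()) >= 2


check = RegimeCheck(intent_held_by_human=True, errors_traceable=False, knowledge_transfers=False)
print(check.failures())    # ['traceability', 'transferability']
print(check.new_regime())  # True
```

The value is less in the code than in forcing an explicit yes/no per test, per task, so the answers can be compared over time.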

Why AI feels different from past tooling

IDEs, frameworks, and cloud each sped us up; none routinely co‑authored intent, masked internal choices, or tempted us to mistake smooth output for sound reasoning. Foundation models operate across more of the stack, faster, and with opaque internal steps—so the psychological effects (like the new freedom to delete) are stronger, and the failure modes are, too.

What the evidence actually says

Controlled task (lab/RCT): Developers with GitHub Copilot completed a JavaScript HTTP server task 55.8% faster than the control group. Great for scoped tasks and onboarding scenarios. [Peng et al., 2023.] https://arxiv.org/abs/2302.06590

Real projects (field‑adjacent RCT): For experienced OSS developers working in their own repos with state‑of‑the‑art tools, AI made them 19% slower on average, even though they expected to be faster. (Interpretation: context complexity, tacit knowledge, and review overhead likely dominate.) [METR, July 2025.] https://metr.org/blog/2025-07-10-early-2025-ai-experienced-os-dev-study/

Sentiment & trust (survey): Adoption is high, but trust is low. In the 2025 Stack Overflow Developer Survey, 46% of developers distrust the accuracy of AI tools vs 33% who trust it; only 3% “highly trust” it. https://survey.stackoverflow.co/2025/ai (blog recap: https://stackoverflow.blog/2025/07/29/developers-remain-willing-but-reluctant-to-use-ai-the-2025-developer-survey-results-are-here/)

Executive claims: Satya Nadella (Apr 30, 2025) said 20–30% of Microsoft’s code was written by AI. Treat this as an executive claim, not an audited metric. https://www.techrepublic.com/article/news-microsoft-meta-code-written-by-ai/
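To put the two headline productivity numbers on one scale, both can be read as ratios of task completion time. This is a rough conversion only: the studies used different tasks, populations, and tools, so the ratios are not directly comparable.

```latex
\begin{aligned}
\text{Peng et al. (2023):}\quad & T_{\text{Copilot}} \approx (1 - 0.558)\,T_{\text{control}} \approx 0.44\,T_{\text{control}} \quad (\text{about } 2.3\times \text{ faster}) \\
\text{METR (2025):}\quad & T_{\text{AI}} \approx 1.19\,T_{\text{no AI}} \quad (\text{about } 1.2\times \text{ slower})
\end{aligned}
```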

Pattern to hold in your head: AI helps most on well‑bounded problems and less‑familiar tasks; value is mixed for senior engineers in messy systems unless teams add intent/traceability guardrails.

What to do Monday

1) Intent ledger.

For each key decision, answer “Who made the decision, and for what reason?” and link the ticket or spec. Place the answer next to the diff or in the PR header.

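A minimal sketch of how a team might enforce the ledger in CI, assuming the PR description carries three hypothetical fields, `Decided-by:`, `Reason:`, and `Spec:`, one per line. The field names, the example URL, and the idea of running this as a CI check are assumptions, not a standard.

```python
import re

# Hypothetical ledger fields; rename to match your team's PR template.
REQUIRED_FIELDS = ("Decided-by", "Reason", "Spec")


def missing_ledger_fields(pr_body: str) -> list[str]:
    """Return the ledger fields that are absent or empty in a PR description."""
    missing = []
    for field in REQUIRED_FIELDS:
        # "Field: value" at the start of a line, with a non-blank value on that same line.
        pattern = rf"^{re.escape(field)}:[ \t]*\S"
        if not re.search(pattern, pr_body, flags=re.MULTILINE):
            missing.append(field)
    return missing


body = """Decided-by: @alice (reviewed the AI draft)
Reason: reuse the sentence splitter's tokenizer so chunk sizes agree
Spec: https://example.com/tickets/CHUNK-42
"""
print(missing_ledger_fields(body))                           # []
print(missing_ledger_fields("Decided-by: @alice\nReason:"))  # ['Reason', 'Spec']
```

A failing check is a prompt to write one sentence of intent, not a gate to route around; keep the fields few so the ledger stays cheap.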
2) Two‑pass review.

Pass A: Before any generation, a human reviews the specification and the intent. This is where prompting matters: supplying your own context in the prompt, instead of leaving gaps for the model to fill from its training data, reduces the risk of unintended decisions and keeps the AI's defaults from overriding your context.

Pass B: Review the diff with a brief note explaining "why this is safe," particularly for cross-cutting changes such as authentication, data, and performance.
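For Pass B, a small helper can at least flag which cross-cutting areas a diff touches, so the reviewer knows which "why this is safe" notes are owed. The glob patterns and file paths below are hypothetical placeholders for your own repo layout; this is a sketch of the gating idea, not a substitute for the human review.

```python
from fnmatch import fnmatch

# Hypothetical glob patterns for cross-cutting areas; replace with your repo's layout.
CROSS_CUTTING = {
    "auth": ["*auth*", "*session*"],
    "data": ["migrations/*", "*schema*"],
    "performance": ["*cache*", "*pool*"],
}


def areas_touched(changed_paths: list[str]) -> set[str]:
    """Cross-cutting areas a diff touches; each one needs a 'why this is safe' note."""
    touched = set()
    for path in changed_paths:
        for area, patterns in CROSS_CUTTING.items():
            if any(fnmatch(path, pattern) for pattern in patterns):
                touched.add(area)
    return touched


def pass_b_ok(changed_paths: list[str], safety_notes: dict[str, str]) -> bool:
    """Pass B succeeds only if every touched area has a non-empty safety note."""
    return all(safety_notes.get(area, "").strip() for area in areas_touched(changed_paths))


diff = ["src/auth/token.py", "src/chunker.py"]
print(areas_touched(diff))  # {'auth'}
print(pass_b_ok(diff, {}))  # False
print(pass_b_ok(diff, {"auth": "Token parsing refactored; validation logic unchanged."}))  # True
```

Path matching is deliberately crude: it errs toward asking for a note, which is cheaper than missing an auth change.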

3) Traceability checklist (per AI‑shaped commit).

Minimal prompt excerpt or summary.

Rationale: why this path over alternatives.
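One lightweight way to attach the checklist to each commit is git trailers (`Key: value` lines in the last paragraph of the commit message). The keys `AI-Prompt` and `Rationale` are hypothetical conventions, and the parser below is a simplified sketch; `git interpret-trailers --parse` handles the real format's edge cases more robustly.

```python
# Hypothetical trailer keys for AI-shaped commits; git does not define these itself.
REQUIRED_TRAILERS = ("AI-Prompt", "Rationale")


def parse_trailers(message: str) -> dict[str, str]:
    """Parse 'Key: value' lines from the last paragraph of a commit message.

    Simplified: ignores folded continuation lines and other edge cases that
    `git interpret-trailers --parse` handles.
    """
    paragraphs = [p for p in message.strip().split("\n\n") if p.strip()]
    if len(paragraphs) < 2:  # subject line only, so there is no trailer block
        return {}
    trailers = {}
    for line in paragraphs[-1].splitlines():
        key, sep, value = line.partition(":")
        key = key.strip()
        if sep and key and " " not in key:  # trailer keys contain no spaces
            trailers[key] = value.strip()
    return trailers


def missing_trailers(message: str) -> list[str]:
    """Required trailers that are absent or empty."""
    found = parse_trailers(message)
    return [key for key in REQUIRED_TRAILERS if not found.get(key)]


msg = """Refactor document chunker

Split chunking from sentence splitting.

AI-Prompt: refactor chunker; keep the sentence splitter's tokenizer
Rationale: one tokenizer keeps chunk sizes consistent downstream
"""
print(missing_trailers(msg))                 # []
print(missing_trailers("Refactor chunker"))  # ['AI-Prompt', 'Rationale']
```

Trailers travel with the commit, so the prompt summary and rationale survive squashes and repo moves better than a chat log does.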

4) Lightweight metrics (3 you could track tomorrow).

Delete‑to‑add ratio on AI‑shaped branches (healthy refactoring vs churn).

AI suggestion acceptance rate (low can mean overhead; high can mean over‑trust).

Time‑to‑debug AI code vs human code (watch for silent slowdowns).
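The three metrics reduce to simple arithmetic. A minimal sketch, assuming you already have line counts from `git log --numstat --format=` (each data line is `added<TAB>deleted<TAB>path`, with `-` for binary files), suggestion counts from your tool's telemetry (for Copilot, the Metrics API in the resources that follow), and debug times your team logs. The sample numbers and paths are made up, and how you label branches as "AI‑shaped" is left to you.

```python
from statistics import median


def delete_to_add_ratio(numstat: str) -> float:
    """Deleted / added lines from `git log --numstat --format=` output.

    Data lines look like 'added<TAB>deleted<TAB>path'; binary files report '-'
    and are skipped, as are blank lines.
    """
    added = deleted = 0
    for line in numstat.splitlines():
        parts = line.split("\t")
        if len(parts) < 3 or not (parts[0].isdigit() and parts[1].isdigit()):
            continue
        added += int(parts[0])
        deleted += int(parts[1])
    if added == 0:
        return float("inf") if deleted else 0.0
    return deleted / added


def acceptance_rate(accepted: int, shown: int) -> float:
    """Share of AI suggestions accepted (low can mean overhead, high can mean over-trust)."""
    return accepted / shown if shown else 0.0


def debug_time_ratio(ai_minutes: list[float], human_minutes: list[float]) -> float:
    """Median time-to-debug for AI-shaped code relative to human code (>1 means slower)."""
    return median(ai_minutes) / median(human_minutes)


numstat = "12\t30\tsrc/chunker.py\n-\t-\tdocs/diagram.png\n\n5\t0\ttests/test_chunker.py\n"
print(round(delete_to_add_ratio(numstat), 2))        # 1.76
print(acceptance_rate(accepted=30, shown=120))       # 0.25
print(debug_time_ratio([30, 50, 40], [20, 25, 30]))  # 1.6
```

Medians rather than means keep one marathon debugging session from dominating; with small samples, treat any ratio as a prompt for discussion, not a verdict.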

5) Measure responsibly (resources).

GitHub’s Copilot RCT (55.8% faster task completion) for scoped tasks: https://arxiv.org/abs/2302.06590

Copilot Metrics API (usage, acceptance): https://github.blog/changelog/2024-04-23-github-copilot-metrics-api-now-available-in-public-beta/ and https://github.blog/changelog/2024-08-09-copilot-metrics-api-organization-team-metrics/

Measuring Copilot impact in enterprise (Accenture/GitHub): https://github.blog/news-insights/research/research-quantifying-github-copilots-impact-in-the-enterprise-with-accenture/

SPACE/DevEx framing for productivity: https://github.blog/enterprise-software/devops/measuring-enterprise-developer-productivity/

The Case Against Regime Change 🛡️

“Isn’t this all just faster autocomplete?”

Sometimes. The regime change shows up when intent starts drifting and reasoning becomes opaque.

“Seniors are just curmudgeons.”

Or they sit at interfaces where tacit knowledge and complex constraints dominate, and today’s models struggle there.

“Trust will follow adoption.”

Maybe, but 2025 data shows adoption up and trust down. Plan for oversight; don’t hope for it.

Critical Assessment and Limitations 🔍

Limited observation timeframes: AI adoption is too recent for decade-long studies

Selection bias: Early adopters might not represent the wider developer population; moreover, some of those adopters also sell AI coding services, which creates a conflict of interest

Contextual variability: Impact may differ significantly between domains (web vs embedded vs ML)

Confounding effects: Difficult to separate AI impact from other simultaneous industry changes

Open Questions Requiring Future Research 🔬

Generational impact: How does experience differ between developers who learned pre- and post-AI?

Long-term effects: How will developer skills evolve over the next 5-10 years?

Organizational variability: What factors determine "successful" vs "problematic" AI implementations?

Reversibility: How difficult is it for AI-dependent teams to return to traditional workflows?

Subtle deception patterns: The chunking service example cited in the introduction illustrates an interesting phenomenon—code that appears well-structured and commented can hide problematic implementation choices (like tokenizer selection). How common are these "surface deceptions" where AI code's apparent quality masks suboptimal architectural decisions? This might represent a specific risk category for AI use: the ability to produce code that passes superficial review but fails in integration testing or production.
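One concrete guardrail for the tokenizer case is a contract test at the integration seam: measure the chunker's output with the tokenizer the downstream component actually uses. A minimal sketch, with two hypothetical toy tokenizers standing in for real ones; the greedy chunker and the budget check are illustrative, not the original service's code.

```python
import re


def whitespace_tokens(text: str) -> list[str]:
    """Hypothetical tokenizer A: plain whitespace split (stand-in for the chunker's choice)."""
    return text.split()


def word_punct_tokens(text: str) -> list[str]:
    """Hypothetical tokenizer B: words and punctuation separately (stand-in for downstream)."""
    return re.findall(r"\w+|[^\w\s]", text)


def chunk(sentences, max_tokens, count=whitespace_tokens):
    """Greedily pack whole sentences into chunks of at most max_tokens, as measured by `count`."""
    chunks, current, used = [], [], 0
    for sentence in sentences:
        n = len(count(sentence))
        if current and used + n > max_tokens:
            chunks.append(" ".join(current))
            current, used = [], 0
        current.append(sentence)
        used += n
    if current:
        chunks.append(" ".join(current))
    return chunks


def over_budget(chunks, max_tokens, downstream=word_punct_tokens):
    """Contract check: chunks that exceed the budget under the downstream tokenizer."""
    return [c for c in chunks if len(downstream(c)) > max_tokens]


sentences = ["Hello, world.", "Tokenizers differ!"]
mismatched = chunk(sentences, max_tokens=4)  # counts with the "wrong" tokenizer
print(over_budget(mismatched, max_tokens=4))  # ['Hello, world. Tokenizers differ!']
aligned = chunk(sentences, max_tokens=4, count=word_punct_tokens)
print(over_budget(aligned, max_tokens=4))     # []
```

Both versions of the chunker look equally clean on review; only the check that crosses the component boundary tells them apart.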

Vigilance Required 🚨

Analysis of available data suggests that AI in software development might present characteristics that distinguish it from previous technological evolutions. The three diagnostic tests indicate possible changes in the cognitive dynamics of the development process.

However, it's crucial to emphasize that the question remains open. The data is too recent and limited for definitive conclusions. What appears clear is that the change is sufficiently rapid and substantial to require conscious attention rather than passive adaptation.

Conclusion 🌟

Treat AI as a co‑author you must supervise, not a vending machine. Keep intent with humans, make reasoning inspectable, and extract transferable lessons from every task. If we don’t, convenience will masquerade as competence. If we do, we’ll delete faster, learn deeper, and ship better.

Join the conversation

Have you experienced this sense of psychological freedom when working with AI?

Are you concerned that we are losing the soul of craftsmanship, or do you see it as a necessary evolution in our creative tools?

Will AI be a ladder or a crutch?

Is AI a step in abstraction or a regime change? What evidence would change your mind?

References

Randomized / Controlled studies

Peng, Kalliamvakou, Cihon, Demirer (2023). “The Impact of AI on Developer Productivity: Evidence from GitHub Copilot.” arXiv. https://arxiv.org/abs/2302.06590

METR (2025). “Measuring the Impact of Early‑2025 AI on Experienced Open‑Source Developers.” Blog RCT summary. https://metr.org/blog/2025-07-10-early-2025-ai-experienced-os-dev-study/

Surveys

Stack Overflow (2025). “Accuracy of AI tools.” Developer Survey. https://survey.stackoverflow.co/2025/ai

Stack Overflow (2025). “Developers remain willing but reluctant to use AI.” Survey recap. https://stackoverflow.blog/2025/07/29/developers-remain-willing-but-reluctant-to-use-ai-the-2025-developer-survey-results-are-here/

Vendor research & measurement resources

GitHub Blog (2022). “Quantifying Copilot’s impact on productivity and happiness.” https://github.blog/news-insights/research/research-quantifying-github-copilots-impact-on-developer-productivity-and-happiness/

GitHub + Accenture (2024). “Copilot’s impact in the enterprise.” https://github.blog/news-insights/research/research-quantifying-github-copilots-impact-in-the-enterprise-with-accenture/

GitHub (2024–2025). Copilot Metrics API updates. https://github.blog/changelog/2024-04-23-github-copilot-metrics-api-now-available-in-public-beta/; https://github.blog/changelog/2024-08-09-copilot-metrics-api-organization-team-metrics/

GitHub (2024–2025). “Does GitHub Copilot improve code quality?” https://github.blog/news-insights/research/does-github-copilot-improve-code-quality-heres-what-the-data-says/

Press / claims

TechRepublic (Apr 30, 2025). “Microsoft CEO Nadella: 20–30% of our code was written by AI.” https://www.techrepublic.com/article/news-microsoft-meta-code-written-by-ai/